LMS

The least mean square (LMS) neuron allows users to implement simple least-mean-square networks outside of a subnetwork. The same thing can be done within a subnetwork; see LMS Network. More information on this rule and its history is available at the LMS Network page.

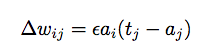

When an LMS neuron is updated, all of the synapses that attach to it are updated using the LMS rule. The rule works as follows: the change in a weight is equal to the product of a learning rate ε, the pre-synaptic neuron activation, and the difference between a desired activation tj and the post-synaptic activation aj (that is, tj minus aj).

The error is the difference between the desired and actual activation of the target neuron. Repeated application of this rule reduces the error.
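The rule described above can be sketched in a few lines of Python. This is only an illustration, not the application's own code: the function name `lms_update` and its parameters are hypothetical, and the sketch assumes the LMS neuron's activation is a plain weighted sum of its inputs, which the text above does not state.

```python
def lms_update(weights, inputs, target, learning_rate):
    """Apply one LMS step to the weights feeding a single neuron.

    Assumption: the neuron is linear, so its activation is the
    weighted sum of the pre-synaptic activations.
    """
    # Post-synaptic activation (a_j), under the linear assumption.
    activation = sum(w * x for w, x in zip(weights, inputs))
    # Error: desired activation (t_j) minus actual activation (a_j).
    error = target - activation
    # Weight change = learning rate * pre-synaptic activation * error.
    new_weights = [w + learning_rate * x * error
                   for w, x in zip(weights, inputs)]
    return new_weights, error


# Repeatedly present one fixed input pattern and record the error size.
weights = [0.0, 0.0]
inputs = [1.0, 0.5]
target = 1.0
errors = []
for _ in range(20):
    weights, error = lms_update(weights, inputs, target, learning_rate=0.1)
    errors.append(abs(error))
```

With these illustrative values the recorded error shrinks on every step, which is the behavior the paragraph above describes. A learning rate that is too large for the input magnitudes can make the error grow instead.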

The desired activation tj is supplied by a neuron connected to the LMS neuron by a signal synapse. This neuron can be thought of as a "teacher" neuron. Signal synapses are colored differently from ordinary synapses, so the teacher is easy to identify. If there is no such neuron, the incoming weights are not updated. If there is more than one teacher, one is chosen arbitrarily.
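The teacher handling described above might look roughly like the following sketch. The `Synapse` class, its fields, and `update_lms_neuron` are hypothetical names introduced for illustration only. The sketch also assumes that signal synapses only carry the target value and are not themselves trained, and it takes the first teacher found as the "arbitrary" choice.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    """Hypothetical stand-in for a synapse attached to an LMS neuron."""
    source_activation: float   # activation of the pre-synaptic neuron
    strength: float            # current weight
    is_signal: bool = False    # True if this synapse comes from a teacher


def update_lms_neuron(incoming, activation, learning_rate):
    """Update ordinary incoming weights; return False if no teacher exists."""
    teachers = [s for s in incoming if s.is_signal]
    if not teachers:
        # No teacher neuron: incoming weights are left unchanged.
        return False
    # More than one teacher: pick one arbitrarily (here, the first found).
    target = teachers[0].source_activation
    error = target - activation
    for s in incoming:
        if not s.is_signal:  # assumption: signal synapses are not trained
            s.strength += learning_rate * s.source_activation * error
    return True


# Two ordinary synapses plus one signal synapse from a teacher neuron.
incoming = [
    Synapse(source_activation=1.0, strength=0.0),
    Synapse(source_activation=0.5, strength=0.0),
    Synapse(source_activation=1.0, strength=1.0, is_signal=True),
]
updated = update_lms_neuron(incoming, activation=0.0, learning_rate=0.1)
```

Returning a flag makes the "no teacher, no update" case explicit to the caller; a real implementation could equally well skip the update silently.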

An LMS neuron or collection of neurons can be used instead of an LMS Network when the user wants to have greater control over the training signal.

Learning Rate: Learning rate is denoted by ε in the equation above. This parameter determines how quickly synapses change.